Met Office are responsible for collecting and processing observation data from weather stations around the world and coast; this data is used to analyse the country’s weather and climate. The observations are valuable to several different consumers, from meteorologists forecasting the weather to climate scientists trying to predict global trends resulting from global warming.

Met Office have been looking to build a replacement observations platform that is more efficient and better suited to their current needs. CACI have been working with Met Office for the last two years to deliver the next-generation system, ‘SurfaceNet’, whose primary requirements are that it be cost-effective and scalable.

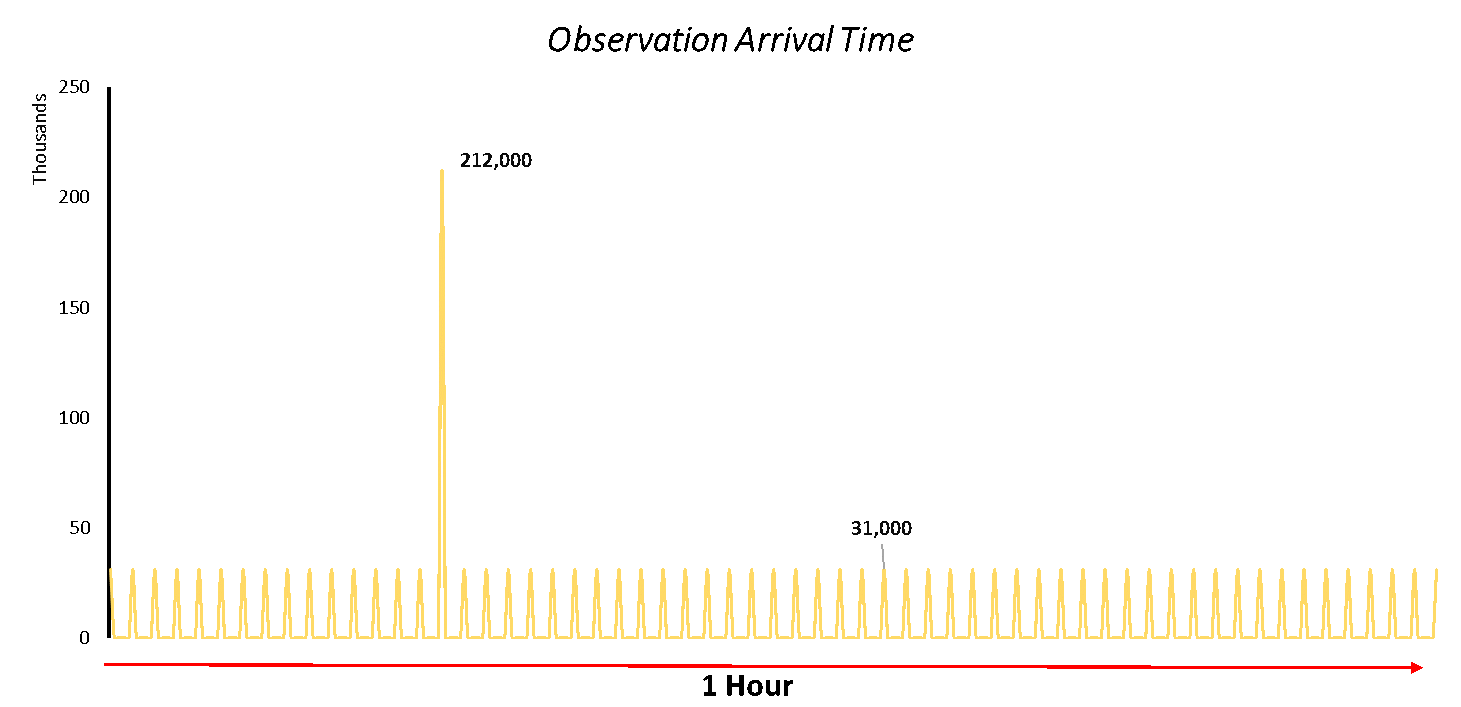

Given Met Office’s ethos of adopting a ‘Cloud First’ approach, and its partnership with AWS, it was an obvious choice to build the system in the AWS cloud. The first important decision was to select our main compute resource. The observation data would be arriving once a minute and, given this spiky arrival pattern, Lambda proved to be the most cost-effective solution, allowing us to pay only for the short periods when compute was required. The platform processes several observations from roughly 400 stations every minute (equating to 15 billion observations per month), so any marginal improvement in compute cost would soon add up.
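
To give a feel for the scale, the quoted figures can be turned into per-minute rates. The snippet below is only back-of-the-envelope arithmetic: it takes the 400 stations and 15 billion observations per month at face value and assumes a 30-day month, which is our assumption rather than a figure from the project.

```python
# Back-of-the-envelope rates from the figures quoted above.
# Assumption (not from the project): a month is taken to be 30 days.
STATIONS = 400
OBSERVATIONS_PER_MONTH = 15_000_000_000
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200 minutes


def implied_rates(observations_per_month: int, stations: int,
                  minutes_per_month: int = MINUTES_PER_MONTH):
    """Return (observations per minute, observations per station per minute)."""
    per_minute = observations_per_month / minutes_per_month
    per_station_per_minute = per_minute / stations
    return per_minute, per_station_per_minute


per_minute, per_station = implied_rates(OBSERVATIONS_PER_MONTH, STATIONS)
print(f"{per_minute:,.0f} observations per minute in total")
print(f"{per_station:,.0f} observations per station per minute")
```

Under those assumptions the platform handles roughly 350,000 observations a minute, or around 870 per station, which is why small per-invocation savings matter.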

Choosing Lambda complemented our desire to have a largely serverless system to minimise maintenance costs, using other serverless AWS resources such as S3, Aurora Serverless, DynamoDB and SQS. This approach avoided the need to provision and manage servers, along with the associated costs. Serverless resources are highly available by design; Aurora Serverless requires the database to be deployed across at least two Availability Zones, while DynamoDB and S3 resources have their data intrinsically spread over multiple data centres.

Most data is ingested from remote data loggers that communicate with the platform via MQTT; AWS IoT Core was the ideal resource for managing this. Using API Gateway, we developed a simple API on top of IoT Core, allowing those administering the system to onboard new loggers, manage their certificates and monitor their statuses. The Simple Email Service (SES) allows ingestion of data from marine buoys and ships that transmit their data via Iridium satellite. Both IoT Core and SES are fully managed by AWS, supplying an easy method of handling data from a range of protocols with minimal operational management.
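
As an illustration of the logger-to-Lambda path, here is a minimal sketch of a handler that an IoT Core rule could invoke with a decoded MQTT message. The payload shape (`station_id`, `observed_at`, `readings`) and the handler itself are hypothetical, invented for this example; the real SurfaceNet message format is not described here.

```python
from datetime import datetime, timezone
from typing import Any, Dict

# Hypothetical payload fields; the real logger message format may differ.
REQUIRED_FIELDS = ("station_id", "observed_at", "readings")


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Validate one logger message and flatten it into per-parameter records."""
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        raise ValueError(f"payload missing fields: {missing}")

    # Normalise the timestamp to UTC (expects an ISO 8601 string with an offset).
    observed_at = datetime.fromisoformat(event["observed_at"]).astimezone(timezone.utc)

    # One record per reading, sorted by parameter name for stable output.
    records = [
        {
            "station_id": event["station_id"],
            "observed_at": observed_at.isoformat(),
            "parameter": name,
            "value": float(value),
        }
        for name, value in sorted(event["readings"].items())
    ]
    return {"count": len(records), "records": records}
```

Because the function is only invoked when a message arrives, it fits the once-a-minute arrival pattern without any idle compute; in a real pipeline the records would then be written to a queue or datastore rather than returned.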

From a development perspective, the stand-out benefit of working in the cloud has been having the ability to deploy fully representative environments to test against. Our infrastructure is defined using CloudFormation, enabling each developer to stand up their own copy of the system when adding a new feature. Eliminating the classic ‘works on my machine’ problems that plague local development allowed for rapid iteration cycles and far fewer bugs during testing. The process means constantly exercising the ability to deploy the system from scratch, which will come in handy when an unforeseen problem occurs in the future.

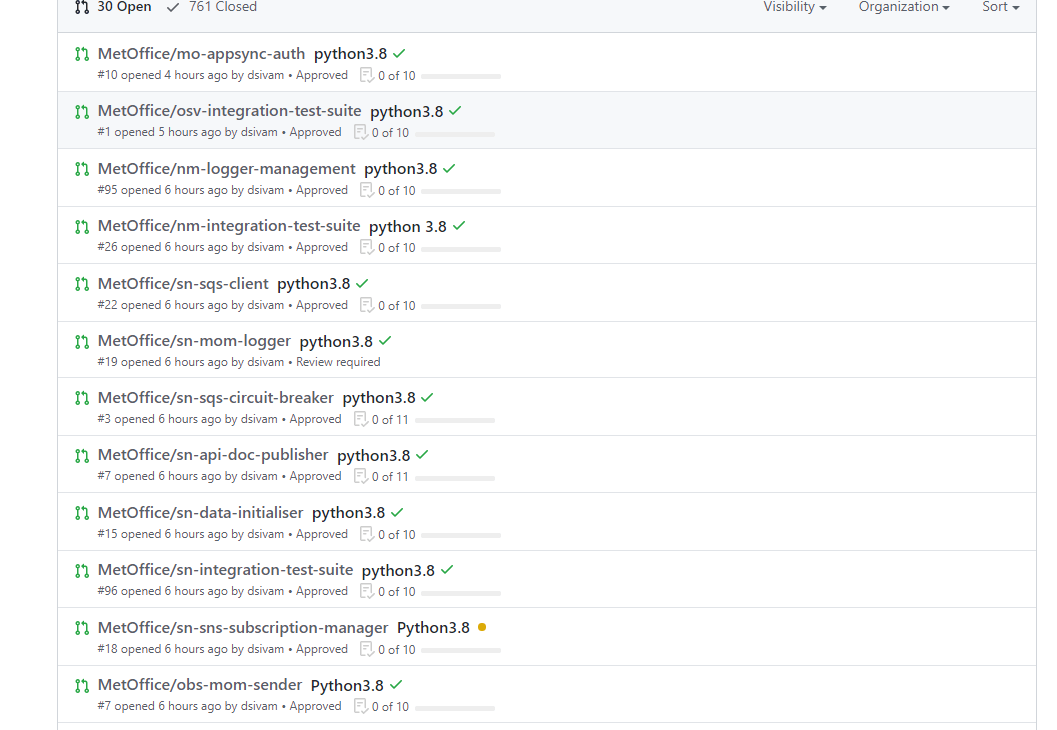

Whilst this suggests a flawless venture into the Cloud sector, the journey hasn’t been without problems. CloudFormation has been incredibly useful, but given the scale and the number of resources, it has become cumbersome. Despite our best mitigation efforts there is still a large amount of repetition, and the total number of lines of YAML we have committed is on par with the number of lines of Python. We would consider using the newer AWS CDK if we were to approach the project again. Additionally, we started off making a new repository for each new Lambda, but this has ended up limiting our ability to share code effectively across components, not to mention having to update ~40 repositories whenever we want to update buildspecs to use a new version of Python.
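
The appeal of defining infrastructure in a general-purpose language can be shown without the CDK itself. The sketch below is plain Python, not CDK code and not how SurfaceNet is built: it generates a CloudFormation template fragment for several Lambda-plus-queue components from one function. The component names, `LambdaExecutionRole` and `ArtifactBucket` are hypothetical and assumed to be defined elsewhere in the template.

```python
import json
from typing import Dict, List


def lambda_with_queue(name: str, runtime: str) -> Dict[str, dict]:
    """Resources for one component: an SQS queue and a Lambda function."""
    return {
        f"{name}Queue": {"Type": "AWS::SQS::Queue"},
        f"{name}Function": {
            "Type": "AWS::Lambda::Function",
            "Properties": {
                "Runtime": runtime,
                "Handler": "app.handler",
                # Hypothetical role and bucket, assumed to exist elsewhere.
                "Role": {"Fn::GetAtt": ["LambdaExecutionRole", "Arn"]},
                "Code": {
                    "S3Bucket": {"Ref": "ArtifactBucket"},
                    "S3Key": f"{name.lower()}.zip",
                },
            },
        },
    }


def build_template(components: List[str], runtime: str) -> dict:
    """Assemble a template fragment; the runtime is set in exactly one place."""
    resources: Dict[str, dict] = {}
    for component in components:
        resources.update(lambda_with_queue(component, runtime))
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


template = build_template(["Ingest", "Decode", "Store"], runtime="python3.11")
print(json.dumps(template, indent=2))
```

Here a Python version bump is a one-line change to the `runtime` argument rather than an edit repeated across many files, which is the kind of saving that a tool like the CDK, combined with a shared repository, is intended to provide.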

It has been a fascinating couple of years, and the main takeaway has been that large organisations such as Met Office, with large-scale bespoke data problems, see the cloud as a desirable environment for building solutions. The maturity of the AWS platform has shown the cloud to be both robust and cheap enough to satisfy the requirements of complex systems such as SurfaceNet, and the cloud will certainly play a big part in the future of both CACI and the Met Office.